Cancer cell line models have been a cornerstone of cancer research for decades. Profiling cancer cell lines can be a powerful way to identify cancer-related gene alterations or pathways and to discover putative drug targets. This webinar will focus on using QIAGEN OmicSoft Lands and Ingenuity Pathway Analysis (IPA) as guides for selecting cell lines and translating insights gained from cell lines into drug target discovery.

This 90-minute event will show how our platform enables scientists to:

• Select appropriate cancer cell lines for a variety of applications, such as drug discovery, precision disease modeling, understanding gene function in cancer and immuno-oncology research.

• Examine various ‘omics data for genes of interest, including expression, mutation, hotspot and gene dependency data.

• Generate networks for hypotheses and test them in silico to improve translation of insights derived from cell line models into drug target identification.

• Perform integrated analyses of public 'omics data and drug response phenotypes in cell line model systems by exploring data from the Library of Integrated Network-Based Cellular Signatures (LINCS).

• Prioritize drug targets and profile phenotypic/downstream effects of drug action by overlaying public data on user-generated networks.

Our system uses millions of curated literature findings in the QIAGEN IPA knowledge base and the OmicSoft digital warehouse. The presentation is intended both for those familiar with Ingenuity Pathway Analysis and for newcomers interested in learning more.

In an era of near-limitless public experimental data but little standardization, meaningful insights are lost to noise. Large collections of quality experimental data are essential for big-picture discoveries that stand up to scrutiny.

In this webinar, you will learn how to feed your drug discovery programs by integrating connections mined from QIAGEN Biomedical Knowledge Base with deeply-curated disease datasets from QIAGEN OmicSoft Lands.

Combining unified 'omics datasets with contextual relationship evidence from our knowledge graph, we will address complex questions such as:

• Which genes aren't expressed in normal tissue, yet are expressed in diseases of interest, based on experimental evidence?

• Which of these proteins are cell surface proteins, with evidence for extracellular localization?

• How are these proteins related directly or indirectly to disease pathways, and can these be connected to known drug targets?

• Can we identify correlated biomarkers, mutation targets, clinical factors or other means of cohort selection?
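The first of these questions can be sketched as a simple filter. The snippet below is a minimal illustration in Python, assuming hypothetical median expression values (in TPM) and an arbitrary cutoff of 1 TPM for calling a gene "expressed". The gene names, values and threshold are invented for the example and do not come from QIAGEN OmicSoft Lands or any QIAGEN interface.

```python
# Hypothetical median expression (TPM) per gene; values are illustrative only.
normal_tpm = {"GENE_A": 0.2, "GENE_B": 15.0, "GENE_C": 0.0, "GENE_D": 0.5}
disease_tpm = {"GENE_A": 42.0, "GENE_B": 18.0, "GENE_C": 0.3, "GENE_D": 9.1}

# Assumed cutoff: a gene counts as "expressed" at or above this level.
EXPRESSED_TPM = 1.0


def disease_specific_genes(normal, disease, cutoff=EXPRESSED_TPM):
    """Return genes below the cutoff in normal tissue but at or above it in disease."""
    return sorted(
        gene
        for gene in normal.keys() & disease.keys()
        if normal[gene] < cutoff and disease[gene] >= cutoff
    )


candidates = disease_specific_genes(normal_tpm, disease_tpm)
print(candidates)
```

In practice the cutoff and the choice of summary statistic would need to be justified for each dataset. The follow-up questions (localization, pathway context, cohort selection) would each narrow this candidate list further.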

Here's the scenario: you're a passionate scientist in a leading pharmaceutical company hoping to uncover a transformative drug candidate. Naturally, you use artificial intelligence (AI) to help you target the most promising leads. After weeks of dedicated work, you start to realize that something seems a little off with your results. Maybe you recognize that the algorithm-proposed drug candidate has a history of poor tolerance in human clinical trials. Or perhaps the drug candidate fails to reproduce even the most basic PK/PD modeling results in vitro. Just like you, many drug discovery researchers have found themselves misled by the results proposed by AI.

Even with state-of-the-art algorithms, outcomes of AI for drug discovery heavily depend on the data and context backing them up. Many researchers, just like you, are seeking ways to navigate the intricacies and challenges of this rapidly evolving field. The path to successful AI-driven drug discovery may appear complex, but with the right guidance, AI can significantly enhance both the efficiency and effectiveness of your drug discovery journey.

Here are 3 of our best secrets to help ensure your success when using AI for drug discovery:

1. Start with quality data

The foundation of any successful AI model lies in the quality of its training data. Inconsistent or noisy biomedical data can introduce biases, potentially making the AI model veer off course. Imagine trying to master a language using an inaccurate dictionary; the outcome would be a garbled mess.

Similarly, training an AI model on low-quality biomedical data can lead to misguided conclusions. Data quality, integrity and relevance are paramount. Using expert-curated databases ensures the model begins with accurate and comprehensive knowledge.

That's where our QIAGEN Biomedical Knowledge Base (BKB) database comes in. Curated by experts and continuously updated, QIAGEN BKB ensures you equip your AI models with the best possible start. It offers a strong foundation for building knowledge graphs and data models. Just as a building's strength depends on its foundation, your AI model's efficacy depends on starting with quality data.

2. Root AI inferences in real biological contexts

The power of AI lies in its ability to process vast amounts of information quickly. But it's worth remembering that an AI model, regardless of its sophistication, doesn't inherently understand the complexities of human biology. It sees numbers, patterns and correlations, but not causation.

An AI model might draw associations that, at a glance, seem significant. However, without the biological context, these associations can be misleading. To avoid chasing false positives, it's crucial to ensure the AI's conclusions are rooted in biological reality.

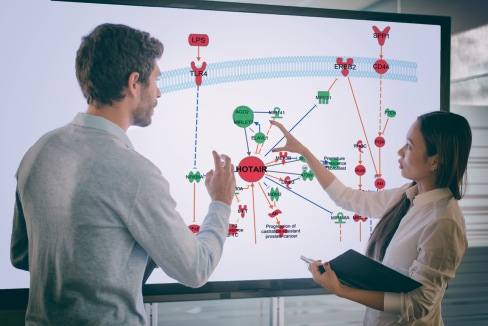

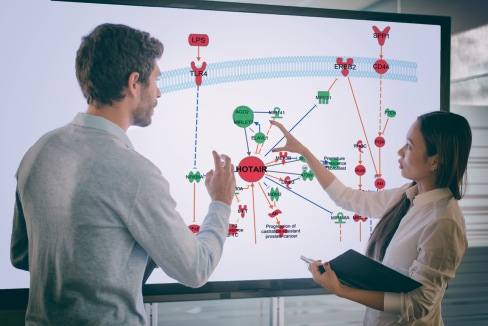

Here's the good news: QIAGEN BKB and QIAGEN Ingenuity Pathway Analysis (IPA) have built-in causality. With IPA, you can quickly check the conclusions your AI generates. IPA's intuitive graphical interface provides visual pathways, disease networks, upstream regulators/downstream effects and isoform-level differential expression analysis, all with the ability to bring in primary datasets for custom-tailored analyses.

3. Validate findings with peer-reviewed research

Science, at its core, thrives on collaboration, verification and iteration. A discovery today can be the stepping stone for a revolutionary breakthrough tomorrow. AI can be a potent tool in accelerating these discoveries, but its suggestions need validation.

While using AI for drug discovery can uncover potential candidates, it's essential to validate these findings using published, peer-reviewed studies. Not only does this process lend credibility to your findings, but it also provides invaluable insights. For instance, understanding which cell lines have been used in previous studies can guide your preclinical testing, ensuring you're on the right track.

For this crucial step, QIAGEN OmicSoft's curated omics data collection is your ally, especially for enterprises in need of high-quality multi-omics datasets. You can tap into a comprehensive landscape of sources, offering validation from published studies beyond just a single public repository. The collection bridges the gap between AI predictions and experimental data, helping you construct disease models and digital twins of cells, organs and organisms.

Validating your cell line selection is also a critical factor for successful preclinical research. Using ATCC Cell Line Land, you can access authenticated cell line ‘omics data to make informed decisions before purchasing cell lines, helping to streamline your workflows, save time and resources, and enhance the predictability and reproducibility of your studies.

You can be confident in steering your research in the right direction with AI, provided you eliminate guesswork and maximize efficiency by using quality biomedical data, ensure the biological soundness of AI results and validate your findings. By applying these three practices to your AI workflow, you can spot promising leads more quickly.

We design our QIAGEN Digital Insights knowledge and software with your success in mind.

After all, the future of new therapies is waiting, and we want to ensure you're well-equipped to lead the way. Want to uncover more secrets to drive drug discovery success from our experts?

Continue reading to see how QIAGEN can power your research.

Looking to collaborate further? Fast track your analysis with QIAGEN Discovery Bioinformatics Services.

Are you a researcher or data scientist working in drug discovery? If so, you depend on data to help you achieve unique insights by revealing patterns across experiments. Yet, not all data are created equal. The quality of data you use to inform your research is essential. For example, if you acquire data using natural language processing (NLP) or text mining, you may have a broad pool of data, but at the high cost of a relatively large number of errors (1).

As a drug development researcher, you’re also familiar with freely available datasets from public ‘omics data repositories. You rely on them to help you gain insights for your preclinical programs. These open-source datasets aggregated in portals such as The Cancer Genome Atlas (TCGA) and Gene Expression Omnibus (GEO) contain data from thousands of samples used to validate or redirect the discovery of gene signatures, biomarkers and therapies. In theory, access to so much experimental data should be an asset. But, because the data are unintegrated and inconsistent, they are not directly usable. So in practice, it’s costly, time-consuming and utterly inefficient to spend hours sifting through these portals to find the information required to clean up these data so you can use them.

Data you can use right away

Imagine how transformative it would be if you had direct access to ‘usable data’ that you could immediately understand and work with, without searching for additional information or having to clean and structure it. Data that are comprehensive yet accurate, reliable and analysis-ready. Data you can begin converting into knowledge right away to drive your biomedical discoveries.

Creating usable data

Data curation has become an essential requirement in producing usable data. Data scientists spend an estimated 80% of their time collecting, cleaning and processing data, leaving less than 20% of their time for analyzing the data to generate insights (2,3). But data curation is not just time-consuming. It’s costly and challenging to scale as well, particularly if legacy datasets must be revised to match updated curation standards.

What if there were a team of experts to take on the manual curation of the data you need so researchers like you could focus on making discoveries?

Our experts have been curating biomedical and clinical data for over 25 years. We’ve made massive investments in a biomedical and clinical knowledge base that contains millions of manually reviewed findings from the literature, plus information from commonly used third-party databases and ‘omics dataset repositories. Our human-certified data enables you to generate insights rather than collect and clean data. With our knowledge and databases, scientists like you can generate high-quality, novel hypotheses quickly and efficiently while using innovative and advanced approaches, including artificial intelligence.

Figure 1. Our workflow for processing 'omics data.

4 advantages of manually curated data

Our 200 dedicated curation experts follow seven best practices for manual curation. Why do we apply so much manual effort to data curation? Based on our principles and practices for manual curation, here are the top reasons manually curated data are fundamental to your research success:

1. Metadata fields are unified, not redundant

Author-submitted metadata vary widely. Manual curation of field names can enforce alignment to a set of well-defined standards. Our curators identify hundreds of columns containing frequently used information across studies and combine these data into unified columns to enhance cross-study analyses. This unification is evident in our TCGA metadata dictionary, for example, where we unified into a single field the five different fields that were used to indicate TCGA samples with a cancer diagnosis of a first-degree family member.

2. Data labels are clear and consistent

Unfortunately, published datasets often use vague abbreviations as labels for patient groups, tissue types, drugs or other main elements. If you want to develop successful hypotheses from these data, it’s critical that you understand the intended meaning of the labels and the relationships among them. Our curators take the time to investigate each study and apply labels precisely and accurately so that you can group and compare the data in the study with other relevant studies.

3. Additional contextual information and analysis

Properly labeled data enables scientifically meaningful comparisons between sample groups to reveal biomarkers. Our scientists are committed to expert manual curation and scientific review, which includes generating statistical models to reveal differential expression patterns. In addition to calculating differential expression between sample groups defined by the authors, our scientists perform custom statistical comparisons to support additional insights from the data.

4. Author errors are detected

No matter how consistent data labels are, NLP processes cannot identify misassigned sample groups, and such errors are devastating to data analysis. Unfortunately, data are sometimes rendered uninterpretable by conflicts between the sample labels presented in a publication and those in its corresponding entry in a public ‘omics data repository. As shown in Figure 2, for a given Patient ID, both ‘Age’ and ‘Genetic Subtype’ are mismatched between the study’s GEO entry and publication table. Which sample labels are correct? Our curators identify these issues and work with authors to correct errors before including the data in our databases.

Figure 2. In this submission to NCBI GEO, the ages of the various patients conflict between the GEO submission and the associated publication. What’s more, the genetic subtype labels are mixed up. Without resolving these errors, the data cannot be used. This attention to detail is required, and can only be achieved with manual curation.
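To make this kind of mismatch concrete, here is a minimal Python sketch that compares two sets of sample annotations and lists every field where they disagree. The patient IDs, ages and subtype labels are hypothetical and are not the values shown in Figure 2, and the function name `find_conflicts` is an illustration, not part of any QIAGEN tool.

```python
# Hypothetical sample annotations keyed by patient ID; values are illustrative only.
geo_entry = {
    "P01": {"Age": 54, "Genetic Subtype": "Subtype 1"},
    "P02": {"Age": 61, "Genetic Subtype": "Subtype 2"},
    "P03": {"Age": 47, "Genetic Subtype": "Subtype 1"},
}
publication_table = {
    "P01": {"Age": 54, "Genetic Subtype": "Subtype 1"},
    "P02": {"Age": 16, "Genetic Subtype": "Subtype 1"},
    "P03": {"Age": 47, "Genetic Subtype": "Subtype 2"},
}


def find_conflicts(source_a, source_b):
    """List (patient_id, field, value_a, value_b) wherever the two sources disagree."""
    conflicts = []
    for patient_id in sorted(source_a.keys() & source_b.keys()):
        record_a, record_b = source_a[patient_id], source_b[patient_id]
        for field in sorted(record_a.keys() & record_b.keys()):
            if record_a[field] != record_b[field]:
                conflicts.append((patient_id, field, record_a[field], record_b[field]))
    return conflicts


conflicts = find_conflicts(geo_entry, publication_table)
for row in conflicts:
    print(row)
```

A check like this can flag that two sources disagree, but it cannot say which one is right. Resolving the conflict still takes a curator's judgment and, often, contact with the authors.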

At the core of our curation process, curators apply scientific expertise, controlled vocabularies and standardized formatting to all applicable metadata. The result is that you can quickly and easily find all applicable samples across data sources using simplified search criteria.

Dig deeper into the value of QIAGEN Digital Insights’ manual curation process

Ready to incorporate into your research the reliable biomedical, clinical and ‘omics data we’ve developed using manual curation best practices? Explore our QIAGEN knowledge and databases, and request a consultation to find out how our manually curated data will save you time and enable you to develop quicker, more reliable hypotheses. Learn more about the costs of free data in our industry report and download our unique and comprehensive metadata dictionary of clinical covariates to experience first-hand just how valuable manual curation really is.

References:

If you work in cancer biomarker and target research, chances are you use data from The Cancer Genome Atlas (TCGA) to help you make discoveries. This comprehensive and coordinated effort helps accelerate our understanding of the molecular causes of cancer through genomic analyses, including large-scale genome sequencing. TCGA covers 33 types of cancer with multi-omics data, such as RNA-seq, DNA-seq, copy number, microRNA-seq and others. Detailed analyses of individual TCGA datasets, as well as pan-cancer meta-analysis, have revealed new cancer subtypes with important therapeutic implications. A key value here is the TCGA metadata. TCGA samples include extensive clinical metadata for diverse cancers. However, inconsistent terminology and formatting limit the utility of these data for pan-cancer analyses.

TCGA data within QIAGEN OmicSoft OncoLand is rigorously curated by experts who apply extensive ontologies and formatting rules to maximize consistency. This allows researchers to more easily find and understand patient characteristics, discover related covariates and explore patterns of clinical parameters across cancers in the context of multi-omics data. QIAGEN OmicSoft established strict standards through our curation of over 600,000 disease-relevant ‘omics samples. QIAGEN OmicSoft Lands provide access to uniformly processed datasets, in-depth metadata curation and data exploration tools that enable quick insights from thousands of deeply-curated ‘omics studies across therapeutic areas. QIAGEN OmicSoft Lands centralize data from Gene Expression Omnibus (GEO), NCBI Sequence Read Archive (SRA), ArrayExpress, TCGA, Cancer Cell Line Encyclopedia (CCLE), Genotype-Tissue Expression (GTEx), Blueprint, International Cancer Genome Consortium (ICGC), Therapeutically Applicable Research to Generate Effective Treatments (TARGET) and others.

OmicSoft’s curation process for TCGA

To give you an idea of the extensive time and care QIAGEN curators invest in manual curation of public ‘omics data, they recently spent over 1400 hours performing a comprehensive update of TCGA metadata within QIAGEN OmicSoft OncoLand, reviewing over 1200 source files. Clinical metadata are now comprehensively documented to clarify the meaning of fields in alignment with the latest OmicSoft curation standards. When TCGA metadata fields are redundant or unclear, new field names are used to clarify meaning. In addition, new metadata from recent TCGA publications are matched to TCGA data to apply recent discoveries about molecularly defined cancer subtypes.

At the core of the OmicSoft curation process, curators apply scientific expertise, controlled vocabulary and standardized formatting to all applicable metadata, either as a Fully Controlled Field (key clinical parameters use terms from QIAGEN-defined ontologies) or a Format Controlled Field (where a QIAGEN OmicSoft ontology is not applicable, terms are formatted consistently to maximize uniformity from semi-structured data). This means you can quickly and easily find all applicable samples using simplified search criteria.

Unification of related TCGA metadata fields

With data submissions from dozens of labs, groups adopt inconsistent standards to represent the same data. Where possible, OmicSoft curators identified hundreds of columns containing the same information for various tumors and combined the data into unified columns to enhance pan-cancer analyses and computational analysis. As an example, the cancer diagnosis of a first-degree family member with a history of cancer was captured in TCGA across five fields from four cancers; QIAGEN OmicSoft TCGA curation unites these into the single field “Family History [Cancer] [Type]”.
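As a rough illustration of what unifying related fields involves, the Python sketch below collapses several source columns into the single field “Family History [Cancer] [Type]”. The five source column names are hypothetical placeholders, since the actual TCGA field names are not listed here. Taking the first non-empty value is a simplifying assumption, not a description of OmicSoft's curation rules.

```python
UNIFIED_FIELD = "Family History [Cancer] [Type]"

# Hypothetical source column names; the real TCGA field names are not given here.
SOURCE_FIELDS = [
    "family_cancer_type_a",
    "family_cancer_type_b",
    "relative_cancer_type",
    "first_degree_relative_cancer",
    "family_history_cancer_type",
]


def unify_family_history(record):
    """Return a copy of the record with the source fields merged into one field."""
    # Keep every column that is not one of the redundant source fields.
    unified = {key: value for key, value in record.items() if key not in SOURCE_FIELDS}
    # Assumption: use the first non-empty source value, or None if all are empty.
    values = [record[field] for field in SOURCE_FIELDS if record.get(field)]
    unified[UNIFIED_FIELD] = values[0] if values else None
    return unified


sample = {"Sample ID": "S1", "relative_cancer_type": "Breast", "family_cancer_type_a": ""}
print(unify_family_history(sample))
```

With every sample carrying the same field name, a single query on that field reaches all tumor types at once.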

Synonymous terms and typographical errors

QIAGEN OmicSoft manually applies extensive treatment ontologies to ensure proper and unambiguous labeling of samples with treatment terms. Because of the many submitting groups, different standards were used for well-established terms, such as drug and radiation treatments, with occasional typos escaping submitter quality control checks. For example, over 20 different terms were used to describe treatment with doxorubicin!
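The idea of mapping many submitted spellings onto one controlled term can be sketched with a small lookup table. This Python example is a simplified illustration, not the OmicSoft treatment ontology. The variant spellings below are assumed examples (Adriamycin is a brand name for doxorubicin), and the function name `normalize_treatment` is hypothetical.

```python
# Assumed variant spellings mapped to one controlled term; not the actual ontology.
TREATMENT_SYNONYMS = {
    "doxorubicin": "Doxorubicin",
    "adriamycin": "Doxorubicin",       # brand name
    "doxorubicin hcl": "Doxorubicin",  # salt form written out
    "doxorubicn": "Doxorubicin",       # illustrative typo
}


def normalize_treatment(raw_term):
    """Map a submitted treatment label to its controlled term if one is known."""
    # Lowercase and collapse whitespace so trivial variations share one key.
    key = " ".join(raw_term.lower().split())
    # Unknown terms are returned trimmed but otherwise unchanged for manual review.
    return TREATMENT_SYNONYMS.get(key, raw_term.strip())


for term in ["  ADRIAMYCIN ", "Doxorubicin  HCl", "doxorubicn", "Cisplatin"]:
    print(repr(term), "->", normalize_treatment(term))
```

A lookup table only covers variants that someone has already seen and recorded, which is why building and maintaining such mappings remains a manual curation task.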

Want to learn more about how you can boost your TCGA exploration to get quicker and more meaningful insights?

Read our white paper to get the full details of how QIAGEN OmicSoft OncoLand helps boost TCGA exploration. Download our unique and comprehensive metadata dictionary of clinical covariates to quickly discover the meaning of over 1000 relevant fields for deeper TCGA data exploration across cancers.

Learn more about the costs of free data in our industry report. Check out our infographic that details the various QIAGEN OmicSoft software tools for integrated ‘omics data, to see which solutions should help you transform your biomarker and target discovery.