Are you a researcher or data scientist working in drug discovery? If so, you depend on data to help you achieve unique insights by revealing patterns across experiments. Yet, not all data are created equal. The quality of data you use to inform your research is essential. For example, if you acquire data using natural language processing (NLP) or text mining, you may have a broad pool of data, but at the high cost of a relatively large number of errors (1).

As a drug development researcher, you’re also familiar with freely available datasets from public ‘omics data repositories. You rely on them to help you gain insights for your preclinical programs. These open-source datasets aggregated in portals such as The Cancer Genome Atlas (TCGA) and Gene Expression Omnibus (GEO) contain data from thousands of samples used to validate or redirect the discovery of gene signatures, biomarkers and therapies. In theory, access to so much experimental data should be an asset. But, because the data are unintegrated and inconsistent, they are not directly usable. So in practice, it’s costly, time-consuming and utterly inefficient to spend hours sifting through these portals to find the information required to clean up these data so you can use them.

Data you can use right away

Imagine how transformative it would be to have direct access to ‘usable data’ that you could immediately understand and work with, without searching for additional information or having to clean and structure it. Data that is comprehensive yet accurate, reliable and analysis-ready. Data you can begin converting into knowledge right away to drive your biomedical discoveries.

Creating usable data

Data curation has become an essential requirement in producing usable data. Data scientists spend an estimated 80% of their time collecting, cleaning and processing data, leaving less than 20% of their time for analyzing the data to generate insights (2,3). But data curation is not just time-consuming. It’s costly and challenging to scale as well, particularly if legacy datasets must be revised to match updated curation standards.

What if there were a team of experts to take on the manual curation of the data you need so researchers like you could focus on making discoveries?

Our experts have been curating biomedical and clinical data for over 25 years. We’ve made massive investments in a biomedical and clinical knowledge base that contains millions of manually reviewed findings from the literature, plus information from commonly used third-party databases and ‘omics dataset repositories. Our human-certified data enables you to generate insights rather than collect and clean data. With our knowledge and databases, scientists like you can generate high-quality, novel hypotheses quickly and efficiently while using innovative and advanced approaches, including artificial intelligence.

Figure 1. Our workflow for processing 'omics data.

4 advantages of manually curated data

Our 200 dedicated curation experts follow these seven best practices for manual curation. Why do we apply so much manual effort to data curation? Based on our principles and practices for manual curation, here are the top reasons manually curated data is fundamental to your research success:

1. Metadata fields are unified, not redundant

Author-submitted metadata vary widely. Manual curation of field names can enforce alignment to a set of well-defined standards. Our curators identify hundreds of columns containing frequently used information across studies and combine these data into unified columns to enhance cross-study analyses. This unification is evident in our TCGA metadata dictionary, for example, where five different fields that recorded the cancer diagnosis of a first-degree family member for TCGA samples were unified into a single field.

2. Data labels are clear and consistent

Unfortunately, it’s common that published datasets provide vague abbreviations as labels for patient groups, tissue type, drugs or other main elements. If you want to develop successful hypotheses from these data, it’s critical you understand the intended meaning and relationship among labels. Our curators take the time to investigate each study and precisely and accurately apply labels so that you can group and compare the data in the study with other relevant studies.

3. Additional contextual information and analysis

Properly labeled data enables scientifically meaningful comparisons between sample groups to reveal biomarkers. Our scientists are committed to expert manual curation and scientific review, which includes generating statistical models to reveal differential expression patterns. In addition to calculating differential expression between sample groups defined by the authors, our scientists perform custom statistical comparisons to support additional insights from the data.

4. Author errors are detected

No matter how consistent data labels are, NLP processes cannot identify misassigned sample groups, and such errors are devastating to data analysis. Unfortunately, it’s not unheard of that data are rendered uninterpretable due to conflicts in sample labeling presented in a publication versus its corresponding entry in a public ‘omics data repository. As shown in Figure 2, for a given Patient ID, both ‘Age’ and ‘Genetic Subtype’ are mismatched between the study’s GEO entry and publication table; which sample labels are correct? Our curators identify these issues and work with authors to correct errors before including the data in our databases.

Figure 2. In this submission to NCBI GEO, the ages of the various patients conflict between the GEO submission and the associated publication. What’s more, the genetic subtype labels are mixed up. Without resolving these errors, the data cannot be used. This attention to detail is required, and can only be achieved with manual curation.
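To make this kind of conflict concrete, here is a minimal sketch in Python of comparing per-patient annotations from two sources. It is an illustration only, not our curation tooling: the patient IDs, field names and values are invented, and `find_conflicts` is a hypothetical helper.

```python
# Hypothetical annotations for the same patients, as they might appear in a
# GEO entry and in the matching publication table (all values are invented).
geo_entry = {
    "P01": {"age": 54, "genetic_subtype": "Subtype A"},
    "P02": {"age": 61, "genetic_subtype": "Subtype B"},
    "P03": {"age": 47, "genetic_subtype": "Subtype A"},
}
publication_table = {
    "P01": {"age": 54, "genetic_subtype": "Subtype A"},
    "P02": {"age": 16, "genetic_subtype": "Subtype A"},
    "P03": {"age": 47, "genetic_subtype": "Subtype B"},
}


def find_conflicts(source_a, source_b):
    """Return (patient_id, field, value_a, value_b) for every disagreement."""
    conflicts = []
    # Only patients and fields present in both sources can be compared.
    for patient_id in sorted(source_a.keys() & source_b.keys()):
        a, b = source_a[patient_id], source_b[patient_id]
        for field in sorted(a.keys() & b.keys()):
            if a[field] != b[field]:
                conflicts.append((patient_id, field, a[field], b[field]))
    return conflicts


conflicts = find_conflicts(geo_entry, publication_table)
for patient_id, field, geo_value, paper_value in conflicts:
    print(f"{patient_id}: {field} is {geo_value!r} in GEO but {paper_value!r} in the paper")
```

A check like this can only flag that two sources disagree. Deciding which label is correct still takes a curator and, often, a conversation with the authors.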

At the core of our curation process, curators apply scientific expertise, controlled vocabularies and standardized formatting to all applicable metadata. The result is that you can quickly and easily find all applicable samples across data sources using simplified search criteria.

Dig deeper into the value of QIAGEN Digital Insights’ manual curation process

Ready to incorporate into your research the reliable biomedical, clinical and ‘omics data we’ve developed using manual curation best practices? Explore our QIAGEN knowledge and databases, and request a consultation to find out how our manually curated data will save you time and enable you to develop quicker, more reliable hypotheses. Learn more about the costs of free data in our industry report and download our unique and comprehensive metadata dictionary of clinical covariates to experience first-hand just how valuable manual curation really is.

References:

If you’re a biologist studying gene function to identify new biomarkers or targets, or testing drug metabolism and toxicity, cell lines are your wingman. They provide the foundation for your experiments, helping you predict clinical response or develop new in vitro models of disease subtypes or patient segments. Yet fundamental to the value of a cell line is understanding its origin, genome and gene expression pattern to help you identify optimal cell lines for your preclinical studies.

Unfortunately, cell line misidentification, genomic aberrations and microbial contamination can compromise the value of cell lines for your experiments (1, 2). A further complication is that current ‘omics data repositories do not contain data on many cell lines. If you’re not studying cancer, you might find yourself lost since most data repositories are missing non-oncology cell line data. You’ll often come up empty-handed when searching for datasets on mouse cell lines, too. This means you need research funds not only to acquire cell lines for your experiments but also to sequence and characterize the genes in those cell lines before you can even begin experiments. This ends up consuming a lot of time and resources, slowing down your drug development research.

Build efficiency into your preclinical pipeline with high-quality cell line ‘omics data

If you depend on cell lines to support your drug development pipeline, we’ve got news that will transform your research.

We’re now offering a new tool that will streamline how you obtain and use cell line ‘omics data to plan and design your preclinical experiments. We partnered with ATCC to establish a database of transcriptomic (RNA-seq) and genomic (whole exome sequencing) datasets from the most highly utilized human and mouse cell lines and primary tissues and cells in ATCC’s collection. These include the most common cell lines, as well as novel cell lines that do not have publicly available transcriptomic data, offering unique data you can’t get your hands on elsewhere. This database is ATCC Cell Line Land.

Cell line ‘omics data from a credible source to ensure reliable research results

ATCC offers credible, authenticated and characterized cell lines, primary tissue and primary cells to enable reproducible research results. The advantage of working with ‘omics data from ATCC cell lines is that the data is derived from cell lines cultured under ISO-compliant conditions. This means the data is reliable and comes from pure, uncontaminated samples. The transcriptome or exome is then sequenced from the cell line or tissue, and the resulting ‘omics data is processed and curated using our stringent and rigorous methods for ‘omics data curation, structuring and integration.

“Every dataset we produce can be traced to a physical lot of cells in our bio repository. Since there are no questions about reproducibility and traceability of those materials, you end up with maximum data provenance.”

– Jonathan Jacobs, PhD, Senior Director of Bioinformatics, ATCC

Manually curated, integrated cell line ‘omics data

The ATCC Cell Line Land datasets are processed following the same high-quality data standards we apply to all QIAGEN OmicSoft Lands collections of ‘omics data, which integrate datasets from the largest public ‘omics data repositories using controlled vocabularies and extensive manual curation. Metadata for ATCC Cell Line Land datasets include standard culture conditions, extraction protocols, sample preparation and NGS library preparation. This consistency in curation increases confidence and enables flexible integration for bioinformatics projects, including AI/ML applications. You can use the data to answer your key research questions, explore genes of interest and investigate mutations that may be important to your in vitro experiments (Figure 1).

Figure 1. Box plot showing MYC gene expression across different cell lines in the human cell line collection of ATCC Cell Line Land. This example shows how you can use ATCC Cell Line Land to quickly find cell lines with either high or low expression for a gene of interest.
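If you were to export expression values for a gene of interest, the same question could be asked in a few lines of Python. This is a rough sketch under stated assumptions: the cell line names and expression values below are invented placeholders, not data from ATCC Cell Line Land, and `rank_by_median` is a hypothetical helper.

```python
from statistics import median

# Hypothetical replicate-level expression values (e.g. log2-scaled) for one
# gene across four placeholder cell lines; none of these numbers are real.
myc_expression = {
    "Cell line A": [7.9, 8.1, 8.3],
    "Cell line B": [3.2, 3.0, 3.5],
    "Cell line C": [5.6, 5.9, 5.4],
    "Cell line D": [9.4, 9.1, 9.0],
}


def rank_by_median(expression_by_line):
    """Sort cell lines from highest to lowest median expression."""
    medians = {line: median(values) for line, values in expression_by_line.items()}
    return sorted(medians.items(), key=lambda item: item[1], reverse=True)


ranked = rank_by_median(myc_expression)
highest, lowest = ranked[0], ranked[-1]
print(f"Highest expression: {highest[0]} (median {highest[1]})")
print(f"Lowest expression: {lowest[0]} (median {lowest[1]})")
```

The median is used here because it is the center line of a box plot and is less sensitive to a single outlying replicate than the mean.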

Tell us, we’re listening: What ATCC cell line data is most valuable to you?

The data in ATCC Cell Line Land is continually growing, with quarterly releases to include ‘omics data on 1000 new samples each year. What’s more, the data grows based on what you, as a researcher, need most. Our team takes your requests to prioritize the cell lines you want added to our ATCC Cell Line Land collection, as well as the type of experimental data you want curated and included in the database. This may include compound treatments with IC50 values or stimulations with cytokine measurements or other parameters. Contact us with your ideas.

Get in touch

Luckily, you no longer need to waste time and money dealing with public portals or taking on the sequencing of cell lines yourself. Speed up cell line characterization and efficiently plan your in vitro testing experiments with high-quality, manually curated cell line ‘omics data from ATCC Cell Line Land. Learn more about how ATCC Cell Line Land and our other integrated ‘omics data collections help you quickly glean insights from public ‘omics data. Is your focus cancer research? Explore how ATCC Cell Line Land is an excellent complement to the Cancer Cell Line Encyclopedia (CCLE) data in QIAGEN OmicSoft OncoLand.

Learn more and request a consultation to explore how ATCC Cell Line Land will streamline your in vitro experiments and accelerate your drug discovery. Read this press release to find out more about our partnership with ATCC.

References:

Do you spend hours cleaning up and integrating ‘omics data, yet still feel like you are drowning in it?

‘Omics data is a powerful resource to help drive innovation in biomarker and target discovery. If you work in drug development, you probably explore potential therapies by using ‘omics data to find new targets and indications. Yet it can be daunting to process, integrate and clean the data to make it meaningful. You’re probably working with data from both public portals and your internally-generated sources, each with its own sets of metadata and respective vocabularies. Perhaps it comes from several different drug discovery programs representing various diseases. The sheer volume and heterogeneity within ‘omics datasets can create a discouraging barrier to drawing meaningful conclusions.

Boost your bioinformatics capabilities

Have you ever thought of seeking the expertise and services of an experienced bioinformatics team to extend and speed up your ‘omics data processing capabilities?

QIAGEN Discovery Bioinformatics Services has the tools, knowledge and resources to help you quickly unravel the biology hidden in your ‘omics data. We supplement your workforce with experts from our bioinformatics team, including Ph.D.-qualified scientists, developers and project managers to provide a customized solution for your project. We do everything from secondary analysis services to in-depth analysis of biological data. We also take on high-quality content curation of literature, datasets and pathways. We can even build custom databases, which are specific collections of integrated ‘omics data with manually curated metadata.

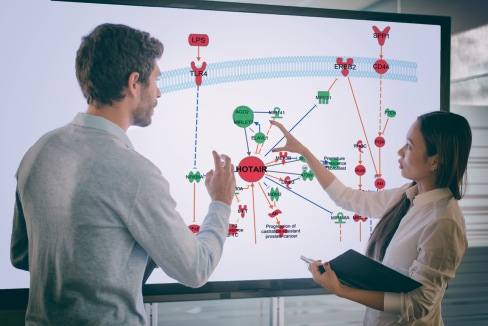

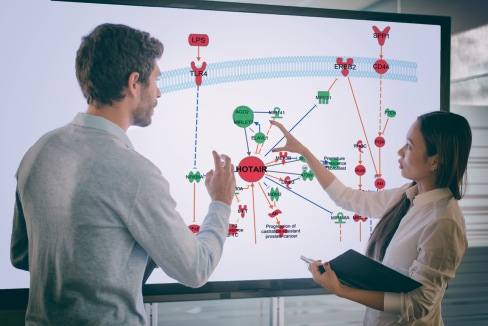

Our services team helps biologists and bioinformaticians like you quickly answer questions relevant to your biomarker and target discovery projects. We do this by using our state-of-the-art software tools and high-quality manually curated content to query your ‘omics data to help answer your hypothesis-generating questions, such as:

Once we run these queries, we can perform deeper meta-analyses on data collections to help you make more accurate hypotheses based on the biological context. Our queries and analyses save you countless hours organizing and visualizing internal pipelines and results, taking you directly down the biological path that makes most sense.

Example service project

A typical transcriptomic project that we take on is processing and storing bulk RNA-seq datasets, including single-cell RNA-seq. Sound familiar? Our QIAGEN Discovery Bioinformatics Services team will create a customized unified pipeline script to process your data and store it in a database framework. We pay special attention to statistical analysis, which can often be tricky when working with transcriptomic data. That’s because this type of data is often generated from heterogeneous samples composed of multiple cell types, and the counts data from a sample represents the average gene expression across all cell types. This heterogeneity is a major hurdle in statistical analysis. Differences in cell type proportions may prevent or bias the detection of cell type-specific transcriptional programs. To manage such challenges, our services team:

Figure 1. Workflow for processing bulk RNA-seq data.
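Why is sample heterogeneity such a hurdle? A small worked example in Python helps. It models a bulk measurement as the proportion-weighted average of cell type-specific expression, as described above. The cell types, proportions and expression values are invented for illustration, and this sketch is not the pipeline our services team uses.

```python
# Hypothetical expression of one gene in each cell type (arbitrary units).
# The values are the same in both samples, so there is no true
# cell type-specific change between them.
expression_by_cell_type = {"T cell": 100.0, "fibroblast": 10.0}

# Hypothetical cell type proportions in two bulk samples.
sample_1 = {"T cell": 0.2, "fibroblast": 0.8}
sample_2 = {"T cell": 0.6, "fibroblast": 0.4}


def bulk_expression(proportions, expression):
    """Model the bulk signal as the proportion-weighted average across cell types."""
    return sum(proportions[cell_type] * expression[cell_type] for cell_type in proportions)


bulk_1 = bulk_expression(sample_1, expression_by_cell_type)
bulk_2 = bulk_expression(sample_2, expression_by_cell_type)
print(f"Bulk expression, sample 1: {bulk_1:.1f}")
print(f"Bulk expression, sample 2: {bulk_2:.1f}")
print(f"Apparent fold change: {bulk_2 / bulk_1:.2f}")
```

The gene looks more than twice as abundant in the second sample even though no cell type changed its expression. The entire difference comes from the shift in cell type proportions, which is why composition has to be accounted for before interpreting differential expression in bulk data.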

Quickly get the output you need

On all our projects, we work with you to determine the output and deliverables that best fit your needs. A typical example of what we provide for bulk RNA-seq data processing and storing is:

By working with QIAGEN Discovery Bioinformatics Services on projects like bulk RNA-seq data processing, you'll save time and increase accuracy. We help you quickly prioritize drug targets, biomarkers and compounds so you can readily gain a more robust and insightful understanding of complex diseases, to drive your next discovery.

Would you like to reduce the burden of working with ‘omics data to more quickly reach your next biomarker or target discovery? Could you use support with RNA-seq data processing and analysis? Let QIAGEN Discovery Bioinformatics Services lend a helping hand. Learn more about our range of bioinformatics services to extend and scale your in-house resources with our expertise and tailored bioinformatics services. Contact us today at QDIservices@qiagen.com to get your next project started. Together, we'll tame the 'omics data beast.

With the scientific research community publishing over two million peer-reviewed articles every year since 2012 (1) and next-generation sequencing fueling a data explosion, the need for comprehensive yet accurate, reliable and analysis-ready information on the path to biomedical discoveries is now more pressing than ever.

Manual curation has become an essential requirement in producing such data. Data scientists spend an estimated 80% of their time collecting, cleaning and processing data, leaving less than 20% of their time for analyzing the data to generate insights (2,3). But manual curation is not just time-consuming. It is costly and challenging to scale as well.

We at QIAGEN take on the task of manual curation so researchers like you can focus on making discoveries. Our human-certified data enables you to concentrate on generating insights rather than collecting data. QIAGEN has been curating biomedical and clinical data for over 25 years. We've made massive investments in a biomedical and clinical knowledge base that contains millions of manually reviewed findings from the literature, plus information from commonly-used third-party databases and 'omics dataset repositories. With our knowledge and databases, scientists can generate high-quality, novel hypotheses quickly and efficiently, while using innovative and advanced approaches, including artificial intelligence.

Here are seven best practices for manual curation that QIAGEN's 200 dedicated curation experts follow, which we presented at the November 2021 Pistoia Alliance event.

These principles ensure that our knowledge base and integrated 'omics database deliver timely, highly accurate, reliable and analysis-ready data. In our experience, 40% of public ‘omics datasets include typos or other potentially critical errors in an essential element (cell lines, treatments, etc.); 5% require us to contact the authors to resolve inconsistent terms, mislabeled treatments or infections, inaccurate sample groups or errors mapping subjects to samples. Thanks to our stringent manual curation processes, we can correct such errors.

Our extensive investment in high-quality manual curation means that scientists like you don't need to spend 80% of their time aggregating and cleaning data. We've scaled our rigorous manual curation procedures to collect and structure accurate and reliable information from many different sources, from journal articles to drug labels to 'omics datasets. In short, we accelerate your journey to comprehensive yet accurate, reliable and analysis-ready data.

Ready to get your hands on reliable biomedical, clinical and 'omics data that we've manually curated using these best practices? Learn about QIAGEN knowledge and databases, and request a consultation to find out how our accurate and reliable data will save you time and get you quick answers to your questions.

References:

If you work in cancer biomarker and target research, chances are you use data from The Cancer Genome Atlas (TCGA) to help you make discoveries. This comprehensive and coordinated effort helps accelerate our understanding of the molecular causes of cancer through genomic analyses, including large-scale genome sequencing. TCGA covers 33 types of cancer with multi-omics data, such as RNA-seq, DNA-seq, copy number, microRNA-seq and others. Detailed analyses of individual TCGA datasets, as well as pan-cancer meta-analysis, have revealed new cancer subtypes with important therapeutic implications. A key value here is the TCGA metadata. TCGA samples include extensive clinical metadata for diverse cancers. However, inconsistent terminology and formatting limit the utility of these data for pan-cancer analyses.

TCGA data within QIAGEN OmicSoft OncoLand is rigorously curated by experts who apply extensive ontologies and formatting rules to maximize consistency. This allows researchers to more easily find and understand patient characteristics, discover related covariates and explore patterns of clinical parameters across cancers in the context of multi-omics data. QIAGEN OmicSoft established strict standards through our curation of over 600,000 disease-relevant ‘omics samples. QIAGEN OmicSoft Lands provide access to uniformly processed datasets, in-depth metadata curation and data exploration tools that enable quick insights from thousands of deeply-curated ‘omics studies across therapeutic areas. QIAGEN OmicSoft Lands centralize data from Gene Expression Omnibus (GEO), NCBI Sequence Read Archive (SRA), ArrayExpress, TCGA, Cancer Cell Line Encyclopedia (CCLE), Genotype-Tissue Expression (GTEx), Blueprint, International Cancer Genome Consortium (ICGC), Therapeutically Applicable Research to Generate Effective Treatments (TARGET) and others.

OmicSoft’s curation process for TCGA

To give you an idea of the extensive time and care QIAGEN curators invest in manual curation of public ‘omics data, they recently spent over 1400 hours performing a comprehensive update of TCGA metadata within QIAGEN OmicSoft OncoLand, reviewing over 1200 source files. Clinical metadata are now comprehensively documented to clarify the meaning of fields in alignment with the latest OmicSoft curation standards. When TCGA metadata fields are redundant or unclear, new field names are used to clarify meaning. In addition, new metadata from recent TCGA publications are matched to TCGA data to apply recent discoveries about molecularly defined cancer subtypes.

At the core of the OmicSoft curation process, curators apply scientific expertise, controlled vocabulary and standardized formatting to all applicable metadata, either as a Fully Controlled Field (key clinical parameters use terms from QIAGEN-defined ontologies) or a Format Controlled Field (where a QIAGEN OmicSoft ontology is not applicable, terms are formatted consistently to maximize uniformity from semi-structured data). This means you can quickly and easily find all applicable samples using simplified search criteria.

Unification of related TCGA metadata fields

Because data submissions come from dozens of labs, groups adopt inconsistent standards to represent the same data. Where possible, OmicSoft curators identified hundreds of columns containing the same information for various tumors and combined the data into unified columns to enhance pan-cancer and computational analyses. As an example, the cancer diagnosis of a first-degree family member with a history of cancer was captured in TCGA across five fields from four cancers; QIAGEN OmicSoft TCGA curation unites these into the single field “Family History [Cancer] [Type]”.
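As a rough illustration of what field unification means in practice, the Python sketch below folds several source columns into the unified field named above. The five source column names are hypothetical stand-ins (the original TCGA field names are not listed in this post), the sample records are invented, and `unify_family_history` is not part of any QIAGEN product.

```python
UNIFIED_FIELD = "Family History [Cancer] [Type]"

# Hypothetical source column names standing in for the five TCGA fields.
SOURCE_FIELDS = [
    "family_history_cancer_type",
    "relative_cancer_type",
    "family_member_cancer_dx",
    "first_degree_relative_cancer",
    "family_cancer_type_other",
]


def unify_family_history(sample):
    """Move the first non-empty source value into the unified field."""
    # Keep every column that is not one of the redundant source fields.
    unified = {key: value for key, value in sample.items() if key not in SOURCE_FIELDS}
    values = [sample[field] for field in SOURCE_FIELDS if sample.get(field)]
    unified[UNIFIED_FIELD] = values[0] if values else None
    return unified


# Invented sample records, each using a different source column.
samples = [
    {"sample_id": "S1", "relative_cancer_type": "breast"},
    {"sample_id": "S2", "family_cancer_type_other": "colon", "tumor_stage": "II"},
    {"sample_id": "S3"},
]
unified_samples = [unify_family_history(sample) for sample in samples]
for record in unified_samples:
    print(record)
```

After unification, a single filter on one column finds every sample with a recorded family history, whichever tumor type it came from. This sketch simply takes the first non-empty value; a record with conflicting values in several source columns would need curator review.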

Synonymous terms and typographical errors

QIAGEN OmicSoft manually applies extensive treatment ontologies to ensure proper and unambiguous labeling of samples with treatment terms. Because of the many submitting groups, different standards were used for well-established terms, such as drug and radiation treatments, with occasional typos escaping submitter quality control checks. For example, over 20 different terms were used to describe treatment with doxorubicin!
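The idea of mapping many submitted spellings onto one controlled term can be sketched in a few lines of Python. The synonym table below is a small invented example, not the OmicSoft treatment ontology: Adriamycin is a brand name for doxorubicin, while the misspelled entries are made up to show how typos would be handled.

```python
# Hypothetical synonym table mapping submitted terms to one controlled term.
# The misspellings are invented for illustration.
SYNONYMS = {
    "doxorubicin": "doxorubicin",
    "adriamycin": "doxorubicin",
    "doxorubicin hcl": "doxorubicin",
    "doxorubicin hydrochloride": "doxorubicin",
    "doxorubicine": "doxorubicin",
    "doxorubcin": "doxorubicin",
}


def normalize_treatment(raw_term):
    """Return the controlled term, or None if the term is not recognized."""
    # Lowercase and collapse repeated whitespace before the lookup.
    key = " ".join(raw_term.lower().split())
    return SYNONYMS.get(key)


for term in ["  Adriamycin ", "DOXORUBICIN  HCl", "doxorubcin", "cisplatin"]:
    print(f"{term!r} -> {normalize_treatment(term)!r}")
```

Terms that are not in the table come back as `None` rather than being guessed at, so they can be routed to a curator instead of being silently mislabeled.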

Want to learn more about how you can boost your TCGA exploration to get quicker and more meaningful insights?

Read our white paper to get the full details of how QIAGEN OmicSoft OncoLand helps boost TCGA exploration. Download our unique and comprehensive metadata dictionary of clinical covariates to quickly discover the meaning of over 1000 relevant fields for deeper TCGA data exploration across cancers.

Learn more about the costs of free data in our industry report. Check out our infographic that details the various QIAGEN OmicSoft software tools for integrated ‘omics data, to see which solutions can help you transform your biomarker and target discovery.

This post is authored by Gnosis Data Analysis I.K.E.

We are proud to announce a new release of BioSignature Discoverer, the plug-in specifically designed to identify collections of biomarkers in omics data.

The new version of the plug-in comes with several important improvements, including:

The new release immediately follows the first scientific publication demonstrating the applicability of BioSignature Discoverer in practice:

Network and biosignature analysis for the integration of transcriptomic and metabolomic data to characterize leaf senescence process in sunflower.

This work employs BioSignature Discoverer to identify biomarkers characterizing leaf senescence in sunflower plants. The biomarkers allow a clear separation across the senescence stages of the plants, and were identified through integrative analysis of transcriptomics and metabolomics data.

Gnosis Data Analysis I.K.E. is a university spin-off of the University of Crete whose mission is empowering companies and research institutions with powerful data analysis solutions and services.

For more details about the BioSignature Discoverer plug-in, please visit the dedicated plug-in page.

For more information about Gnosis Data Analysis, please visit their website.