Before delving into details, it’s vital to understand that automation and manual curation might work with the same data, but they play in completely different leagues. It’s almost like comparing Formula 1 and NASCAR: both are about cars, but their rules, quality, audience, and specifications are entirely different.

When scientists, bioinformaticians, and clinicians look for a somatic mutation resource to support their next-generation sequencing (NGS) data analysis and precision oncology activities, the priorities are accuracy, transparency, and flexibility. Only one database ticks all of these boxes, and that's COSMIC, the Catalogue of Somatic Mutations in Cancer.

On close inspection, it’s clear that COSMIC is the only major resource with the scope and breadth to deliver all three. Our analysis provides four key reasons why manual curation is the ‘gold standard’ and will keep its place on the podium for a while yet.

COSMIC deploys high-precision data curation methods. It comprises information from almost 1.5 million cancer samples, manually curated from more than 28,000 peer-reviewed papers by PhD-level experts with decades of experience. These experts perform exhaustive literature searches to select papers from which they reorganize, interpret, standardize, and catalog mutation data, phenotype information, and clinical details. To date, manual curation remains the gold standard for associating genotypic and phenotypic data, as it is the most precise approach and delivers higher-quality data.

On the other hand, advanced machine learning tools have been developed to accelerate the retrieval of variant evidence from the scientific literature. However, the quality and accuracy of the genomic data they extract sometimes leave much to be desired. For instance, crowdsourcing and artificial intelligence (AI) applications have shown significant error rates when associating variants with the correct genes. Text-mining approaches also miss substantial numbers of disease-associated mutations and produce false-positive article associations.

In precision oncology, quality is far more important than quantity, which is why we choose manual curation over other options.

Natural language processing (NLP) and machine learning (ML) approaches facilitate “seeding” relationships from articles to describe genotypic and phenotypic relationships. While these approaches let AI-driven databases scale the indexing of PubMed articles, they do not provide the precision needed to curate deep, unstructured biological, phenotypic, and complex clinical data, including data found in figures, full text, and supplementary material.

Deep manual curation, however, does. This is because human judgment remains critical to analyzing and capturing complex relationships, interactions, and contradictory evidence. The high-touch human review process COSMIC employs ensures accuracy, specificity, relevance, context, and consistency in the data.

In COSMIC, every data point is traceable to the source, and data processing is documented. The data sources that feed into COSMIC to characterize cancer samples and mutations include peer-reviewed papers, targeted gene-screening panels, genome-wide screen data, and cancer cell line omics data — all of which users have full access to and can use as preferred.

COSMIC’s cancer histology/phenotype classification system is unique and is the world’s most comprehensive cancer phenotype classification linked to somatic mutations. Aside from loosely following the World Health Organization (WHO) classification system, COSMIC goes into more detail and often incorporates new classifications before they are approved by the WHO.

COSMIC presents the cancer site and cancer histology separately, each as a four-level hierarchy: e.g. lung/left lower lobe/ns/ns for the site and carcinoma/adenocarcinoma/ns/ns for the histology, where “ns” stands for “not specified”.
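To make the four-level layout concrete, here is a minimal Python sketch that splits a site or histology string such as `lung/left lower lobe/ns/ns` into its levels and reports the most specific one. The slash-delimited input, the `FourLevelTerm` class and the `parse_term` function are hypothetical illustrations, not COSMIC’s export format or API; the sketch also assumes no level name itself contains a slash.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Level = Optional[str]


@dataclass(frozen=True)
class FourLevelTerm:
    """Hypothetical container for one four-level site or histology term."""
    levels: Tuple[Level, Level, Level, Level]

    def most_specific(self) -> Level:
        """Return the deepest level that is actually specified (not 'ns')."""
        for level in reversed(self.levels):
            if level is not None:
                return level
        return None


def parse_term(text: str) -> FourLevelTerm:
    """Split 'a/b/c/d' into exactly four levels, mapping 'ns' (not specified) to None."""
    parts = [part.strip() for part in text.split("/")]
    if len(parts) != 4:
        raise ValueError(f"expected 4 levels, got {len(parts)}: {text!r}")
    a, b, c, d = (None if p.lower() == "ns" else p for p in parts)
    return FourLevelTerm((a, b, c, d))


site = parse_term("lung/left lower lobe/ns/ns")
histology = parse_term("carcinoma/adenocarcinoma/ns/ns")
print(site.most_specific(), "|", histology.most_specific())
```

Keeping site and histology as separate objects mirrors the separation described above, so a query can filter on, say, the second histology level without touching the anatomical site.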

In clinical genomics, data quality standards are high, because outputs are only as reliable as the evidence used to obtain them.

Text-mining tools are useful and helpful in identifying relevant information, but to rely solely on them is to leave the door open to misleading or missing critical evidence.

COSMIC shuts, locks, and seals that door. A team of expert variant scientists updates COSMIC three times a year. They constantly review the biomedical literature by hand to classify variants and harmonize differences in nomenclature across gene transcripts. COSMIC’s experts focus on continuous curation and variant reclassification, never relying on updates from external entities. The result is a database suited to deep, accurate, and thorough exploration of human genetics.

All of this is why COSMIC is a vital addition to any precision oncology toolbox.

With over 71 million somatic mutations, COSMIC is the world’s largest expert-curated somatic mutation database, trusted by over 20,000 users.

Introduction to de novo transcriptome assembly

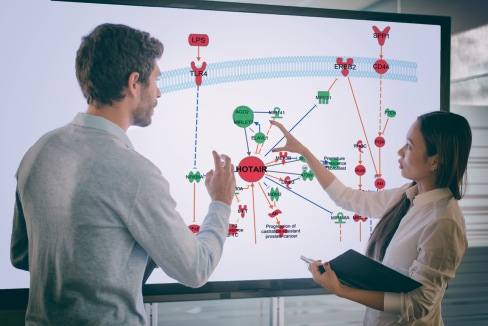

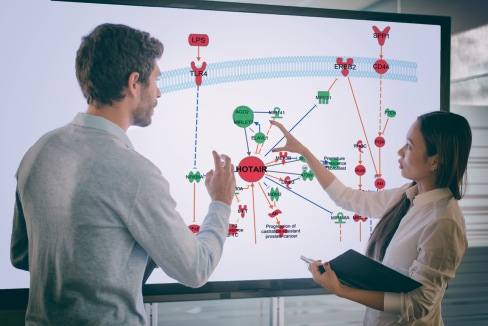

De novo transcriptome assembly using RNA-seq is an efficient way to gather sequence-level information on transcripts, to measure expression levels and to identify SNPs. It has critical applications in fields such as plant breeding and lncRNA research, where reference genome sequences are absent, incomplete, inadequately annotated or impractical to assemble because of tetraploidy or hexaploidy. In these cases, transcriptomics offers an efficient route to genomic information: RNA is extracted from various tissues and conditions, reverse-transcribed into cDNA, prepared into NGS libraries and sequenced. De novo assembly of the sequencing reads then yields contigs, or candidate transcripts. Reads can be mapped back to this transcriptome to estimate gene expression levels and call variants, and the read mapping also provides statistics that serve as quality metrics for the de novo assembly (proportion of reads mapped, broken read pairs, etc.).

Figure 1. RNA-seq workflow for de novo assembly of transcriptomes with applications in plant breeding, lncRNA identification and virome metatranscriptomics.

Applications in plant genomics, lncRNA discovery in zebrafish and virus discovery in peatland microbiome metatranscriptomes

For plant breeders pursuing genotyping-by-sequencing approaches, de novo transcriptome assembly can be a fruitful strategy: it yields in-depth knowledge of the germplasm transcriptome(s), which supports the selection of parents for new crosses and the introgression of novel alleles from exotic germplasm. It is also a cost-effective route to SNP discovery in breeding programs.

Vendramin et al. (2019) prepared cDNA libraries from four tissues and, after sequencing and removing reads originating from E. coli, mitochondria or chloroplasts, built de novo assemblies from the pooled total of 384 million reads from these four libraries. QIAGEN CLC and Velvet-Oases were benchmarked against each other at various parameter settings, and the completeness of the assemblies was assessed by comparison with independent samples of wheat genes. To cite the authors: “The assembly performed with CLC with k-mer 64 and all four tissues together was selected due to the best overall features including N50, contig length and total size assembled. […] One of the most commonly used tools, Trinity, was tested as well; however, after a few tests it was abandoned since the results obtained did not significantly improve the quality of the assembly, coupled with an unreasonable request of resources.”
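The quote names N50 as one of the selection criteria. As a reminder of what that number measures, here is a minimal Python sketch of the standard definition: sort contigs from longest to shortest and report the length at which the running total first reaches half of all assembled bases. It is not CLC’s own implementation, and contig lengths are simply passed in as a list.

```python
from typing import Iterable


def n50(contig_lengths: Iterable[int]) -> int:
    """Return the N50: the length L such that contigs of length >= L
    together contain at least half of all assembled bases."""
    lengths = sorted((n for n in contig_lengths if n > 0), reverse=True)
    if not lengths:
        raise ValueError("no contigs with positive length")
    half = sum(lengths) / 2
    running = 0
    for length in lengths:
        running += length
        if running >= half:
            return length
    return lengths[-1]  # not reached for non-empty input; keeps the return type explicit


print(n50([2, 3, 4, 5, 6, 7, 8, 9, 10]))
```

For the nine toy contigs above (54 bp in total), the running total reaches 27 bp at the 8 bp contig, so the N50 is 8. On its own, N50 rewards contiguity but says nothing about correctness, which is why it was weighed together with contig length, total assembled size and the completeness check against independent wheat genes.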

Honaas et al. (2016) conducted a detailed comparison of transcriptome assemblies for Arabidopsis thaliana and Oryza generated with six de novo assemblers: QIAGEN CLC, Trinity, SOAPdenovo-Trans, Oases, ABySS and NextGENe. A careful evaluation revealed that Trinity, QIAGEN CLC and SOAPdenovo-Trans performed equivalently and were superior to Oases, ABySS and NextGENe.

In a compelling example of working around inadequate genome annotation, Valenzuela-Muñoz et al. (2019) identified a total of 12,165 putative lncRNA sequences in the zebrafish kidney transcriptome. QIAGEN CLC was used for de novo assembly of RNA-seq data from wild-type and rag1 mutant fish challenged with spring viraemia of carp virus (SVCV), in order to identify candidate lncRNAs involved in innate immunity in vertebrates. This clever approach identifies non-annotated transcripts while simultaneously measuring differential gene expression in the conditions of interest. Gene Ontology (GO) terms of nearby protein-coding genes were then used to infer the likely functions of the lncRNAs by proxy.
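The “GO by proxy” idea can be sketched in a few lines of Python: collect the GO terms of protein-coding genes lying within a fixed distance of a candidate lncRNA and count how often each term occurs. The 10 kb window, the `Feature` record and all gene names and coordinates below are hypothetical; the study’s actual neighborhood definition is not given here, and the GO identifiers are only illustrative.

```python
from collections import Counter
from dataclasses import dataclass
from typing import FrozenSet, Iterable


@dataclass(frozen=True)
class Feature:
    """A genomic feature with 1-based inclusive coordinates (hypothetical layout)."""
    name: str
    chrom: str
    start: int
    end: int
    go_terms: FrozenSet[str] = frozenset()


def gap(a: Feature, b: Feature) -> int:
    """Distance in bp between two features on one chromosome (0 if they overlap)."""
    return max(0, max(a.start, b.start) - min(a.end, b.end))


def go_by_proxy(lnc: Feature, genes: Iterable[Feature], window: int = 10_000) -> Counter:
    """Count GO terms of protein-coding genes lying within `window` bp of an lncRNA."""
    terms: Counter = Counter()
    for gene in genes:
        if gene.chrom == lnc.chrom and gap(lnc, gene) <= window:
            terms.update(gene.go_terms)
    return terms


genes = [
    Feature("geneA", "chr1", 5_000, 8_000, frozenset({"GO:0006955"})),
    Feature("geneB", "chr1", 15_000, 20_000, frozenset({"GO:0006955", "GO:0045087"})),
    Feature("geneC", "chr1", 200_000, 210_000, frozenset({"GO:0008150"})),
    Feature("geneD", "chr2", 9_000, 13_000, frozenset({"GO:0008150"})),
]
lnc = Feature("lnc_candidate_1", "chr1", 10_000, 12_000)
print(go_by_proxy(lnc, genes))
```

Only geneA and geneB fall within the window on the same chromosome, so the candidate inherits their immune-related terms. In practice the window size is a tunable assumption: too wide and unrelated genes dilute the signal, too narrow and many lncRNAs receive no annotation at all.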

Stough et al. (2018) used metatranscriptomics to describe the diversity and activity of viruses infecting microbes within the Sphagnum peat bog. RNA was extracted from Sphagnum plant stems, reverse-transcribed, prepared into libraries and sequenced, and the reads were then assembled de novo into metatranscriptomes using QIAGEN CLC. Contigs were screened for the presence of conserved virus marker genes. With this elaborate bioinformatics pipeline, the authors identified a treasure trove of new, undocumented phages, single-stranded RNA (ssRNA) viruses, nucleocytoplasmic large DNA viruses (NCLDV), and virophages or polinton-like viruses, and they built co-occurrence networks based on expression levels. This innovative approach is likely to be useful for identifying virus diversity and interactions in understudied clades of the microbiome.
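One common way to build an expression-based co-occurrence network is to correlate the abundance profiles of contigs across samples and keep strongly correlated pairs as edges. The Python sketch below does exactly that; the choice of Pearson correlation, the `min_r=0.8` cut-off and the toy profiles are assumptions for illustration, not the method reported by Stough et al.

```python
from itertools import combinations
from math import sqrt
from typing import Dict, List, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation of two equal-length profiles (0.0 if either is constant)."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("profiles must have equal length >= 2")
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sd_x = sqrt(sum((a - mean_x) ** 2 for a in x))
    sd_y = sqrt(sum((b - mean_y) ** 2 for b in y))
    if sd_x == 0 or sd_y == 0:
        return 0.0
    return cov / (sd_x * sd_y)


def cooccurrence_edges(
    profiles: Dict[str, Sequence[float]], min_r: float = 0.8
) -> List[Tuple[str, str, float]]:
    """Link every pair of contigs whose expression profiles correlate at r >= min_r."""
    edges = []
    for a, b in combinations(sorted(profiles), 2):
        r = pearson(profiles[a], profiles[b])
        if r >= min_r:
            edges.append((a, b, r))
    return edges


# Hypothetical expression values for three contigs across four samples.
profiles = {
    "phage_1": [1.0, 4.0, 9.0, 16.0],
    "host_A": [2.0, 8.0, 18.0, 32.0],
    "virus_2": [5.0, 3.0, 5.0, 3.0],
}
print(cooccurrence_edges(profiles))
```

Here only `host_A` and `phage_1` rise and fall together, so they form the single edge. Real metatranscriptome studies often prefer rank-based or compositionally aware measures and correct for multiple testing; the plain Pearson version is used only because it keeps the idea visible.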

Algorithmic steps in de novo transcript assembly

The de novo assembler of QIAGEN CLC Genomics Workbench uses de Bruijn graphs to represent overlapping reads, a common approach for short-read de novo assembly that handles large numbers of reads efficiently. Two parameters govern the construction and resolution of the de Bruijn graph, and they can be adjusted to reflect assumptions about sequencing errors and repeat sizes: the k-mer size, used for graph construction, and the bubble size, used for graph resolution.
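To show what graph construction from k-mers means in practice, here is a minimal Python sketch of the common textbook formulation: nodes are (k−1)-mers, every k-mer seen in a read adds an edge from its prefix to its suffix, and following unbranched nodes spells out a contig. This is a simplification, not CLC’s implementation: it ignores reverse complements, edge coverage and in-degree checks.

```python
from collections import defaultdict
from typing import Dict, Iterable, Set


def build_de_bruijn(reads: Iterable[str], k: int) -> Dict[str, Set[str]]:
    """Textbook de Bruijn graph: nodes are (k-1)-mers, and every k-mer
    observed in a read adds an edge from its prefix to its suffix."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for read in reads:
        for i in range(len(read) - k + 1):
            kmer = read[i:i + k]
            graph[kmer[:-1]].add(kmer[1:])
    return dict(graph)


def walk_unbranched(graph: Dict[str, Set[str]], start: str) -> str:
    """Spell out a sequence by following nodes that have exactly one successor."""
    sequence, node, seen = start, start, {start}
    while len(graph.get(node, ())) == 1:
        (nxt,) = graph[node]
        if nxt in seen:  # stop on cycles, e.g. tandem repeats
            break
        sequence += nxt[-1]
        seen.add(nxt)
        node = nxt
    return sequence


graph = build_de_bruijn(["ACGTC", "CGTCA"], k=3)
print(walk_unbranched(graph, "AC"))
```

The two overlapping reads share the k-mers `CGT` and `GTC`, so they collapse onto the same path and the walk reconstructs `ACGTCA` without ever comparing the reads to each other directly. That is what makes the approach efficient for very large numbers of short reads.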

Figure 2. A bubble caused by a heterozygous SNP or a sequencing error.

Figure 3. The central node represents a repeat region that occurs twice in the genome. The neighboring nodes represent the regions flanking the two copies of this repeat.
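The bubble in Figure 2 can be reproduced with two reads that differ at a single base. The Python sketch below finds forks whose branches rejoin within a bounded number of nodes; that bound plays the role of the bubble size parameter, but measuring it in graph nodes is an assumption made for simplicity, and the helper names `follow` and `find_bubbles` are hypothetical. An assembler would then resolve the bubble, for example by keeping the better-supported branch; that step is omitted.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def build_de_bruijn(reads: Iterable[str], k: int) -> Dict[str, Set[str]]:
    """Nodes are (k-1)-mers; each k-mer adds an edge prefix -> suffix."""
    graph: Dict[str, Set[str]] = defaultdict(set)
    for read in reads:
        for i in range(len(read) - k + 1):
            graph[read[i:i + k - 1]].add(read[i + 1:i + k])
    return dict(graph)


def follow(graph: Dict[str, Set[str]], start: str, max_nodes: int) -> List[str]:
    """Follow single-successor nodes from `start`, visiting at most `max_nodes` nodes."""
    path = [start]
    node = start
    while len(path) < max_nodes and len(graph.get(node, ())) == 1:
        (node,) = graph[node]
        path.append(node)
    return path


def find_bubbles(graph: Dict[str, Set[str]], max_nodes: int) -> List[Tuple[str, str]]:
    """Report (fork, merge) node pairs where two branches rejoin within max_nodes."""
    bubbles = []
    for fork, successors in graph.items():
        if len(successors) < 2:
            continue
        branches = [follow(graph, s, max_nodes) for s in sorted(successors)]
        for i in range(len(branches)):
            for j in range(i + 1, len(branches)):
                in_j = set(branches[j])
                merge = next((n for n in branches[i] if n in in_j), None)
                if merge is not None:
                    bubbles.append((fork, merge))
    return bubbles


# Two reads that differ at one base (G vs C), as a heterozygous SNP or an error would.
graph = build_de_bruijn(["AACGTT", "AACCTT"], k=3)
print(find_bubbles(graph, max_nodes=3))
print(find_bubbles(graph, max_nodes=2))
```

With a limit of three nodes, the branches through `CG` and `CC` rejoin at `TT` and the bubble is detected; with a limit of two they do not rejoin in time and the variant is left unresolved. A larger limit catches longer divergent paths but also risks merging genuinely different sequences.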

To strike a balance between error-induced misassembly with long k-mers and repeat-induced misassembly with short k-mers, the QIAGEN CLC assembler automatically sets the default k-mer size as a function of the total amount of input data: the more reads, the larger the k-mer size. For de novo assembly of 1 Gbp of sequence, the default k-mer size is 22 (see the word size 22 entry below), and it increases by one for every tripling of input sequence volume.

| Word size | Input sequence volume (bp) |
|---|---|
| 12 | 0 to 30000 |
| 13 | 30001 to 90002 |
| 14 | 90003 to 270008 |
| 15 | 270009 to 810026 |
| 16 | 810027 to 2430080 |
| 17 | 2430081 to 7290242 |
| 18 | 7290243 to 21870728 |
| 19 | 21870729 to 65612186 |
| 20 | 65612187 to 196836560 |
| 21 | 196836561 to 590509682 |
| **22** | **590509683 to 1771529048** |
| 23 | 1771529049 to 5314587146 |
| 24 | 5314587147 to 15943761440 |
| 25 | 15943761441 to 47831284322 |
| 26 | 47831284323 to 143493852968 |
| 27 | 143493852969 to 430481558906 |
| 28 | 430481558907 to 1291444676720 |
| 29 | 1291444676721 to 3874334030162 |
| 30 | 3874334030163 to 11623002090488 |

etc.
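The ranges above follow a regular pattern: each upper bound is three times the previous one plus two (30000, 90002, 270008, …), which is equivalent to an upper bound of 30001 × 3^(w − 12) − 1 bp for word size w. The Python sketch below reproduces the lookup from that pattern. The function name is hypothetical, and capping the result at 64 (the maximum k-mer size given in the next paragraph) is an assumption about how the default behaves for enormous inputs.

```python
def default_word_size(total_bp: int, max_word: int = 64) -> int:
    """Return the default word (k-mer) size for a given input volume in bp,
    following the table: word size 12 covers 0-30000 bp, and each further
    size covers the next range, whose upper bound is 3 * previous + 2."""
    if total_bp < 0:
        raise ValueError("total_bp must be non-negative")
    word, upper = 12, 30_000
    while total_bp > upper and word < max_word:
        word += 1
        upper = 3 * upper + 2  # reproduces 90002, 270008, 810026, ...
    return word


for volume in (30_000, 30_001, 1_000_000_000, 11_623_002_090_488):
    print(f"{volume:>18,} bp -> word size {default_word_size(volume)}")
```

For the 1 Gbp example in the text this returns 22, and each tripling of the input moves the result up by one, matching the description above.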

With high-quality short reads such as Illumina reads, long k-mers are preferred, as they maximize repeat resolution without producing many error-induced bubbles like the one shown in Figure 2. The maximum k-mer size allowed by the QIAGEN CLC assembler is 64. This limit exists because gains in assembly quality with k-mers larger than 64 are marginal, whereas the computational requirements (memory, run time and disk space) become prohibitive.
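Why long k-mers pay off only when reads are accurate can be seen from a simple estimate: if sequencing errors occur independently at a per-base rate e, a k-mer is error-free with probability (1 − e)^k, and a read of length L contains (L − k + 1) k-mers. The Python sketch below evaluates this; the independence assumption, the 1% error rate and the 150 bp read length are illustrative choices, not figures from the text.

```python
def p_error_free(k: int, error_rate: float) -> float:
    """Probability that a k-mer contains no sequencing error, assuming
    independent per-base errors at a constant rate."""
    return (1.0 - error_rate) ** k


def expected_error_free_kmers(read_len: int, k: int, error_rate: float) -> float:
    """Expected number of error-free k-mers in one read of length `read_len`."""
    if k > read_len:
        return 0.0
    return (read_len - k + 1) * p_error_free(k, error_rate)


for k in (22, 32, 64):
    p = p_error_free(k, 0.01)
    n = expected_error_free_kmers(150, k, 0.01)
    print(f"k={k}: P(error-free) = {p:.3f}, error-free k-mers per 150 bp read = {n:.1f}")
```

At a 1% error rate roughly half of all 64-mers carry at least one error, each of which can create a spurious branch in the graph, whereas at much lower error rates the same k loses very little. This is the trade-off behind preferring long k-mers for high-quality reads.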

Resources for de novo transcript assembly

The de novo assembly algorithm is a generic tool in the QIAGEN CLC Genomics Workbench and is equally applicable to transcript and genome assemblies. QIAGEN CLC Workbenches come with ready-to-use resources for reference (the manual) and quick start (the tutorial), in addition to detailed discussions in the form of whitepapers and application notes.

The de novo assembly section of the QIAGEN CLC Genomics Workbench manual contains a 'best practices' section on how to obtain the best results by 1) preparing high-quality input data and monitoring quality control output, 2) setting parameters of the de novo assembly algorithm, and 3) evaluating the quality of the assembly and refining it.

The de novo assembly tutorial provides a step-by-step guide to performing de novo assembly, using a bacterial genome as an example. In addition to quality-control monitoring and de novo assembly parameter settings, the tutorial explains the value of mapping reads back to the contigs. The resulting read mapping can then be checked for 'broken pairs': paired-end reads that originate from the same molecule in the library but end up in separate contigs, which indicates problems with those contigs.
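The two quality metrics mentioned so far, the proportion of reads mapped and the rate of broken pairs, are easy to compute once each read pair has been reduced to the contigs its mates landed on. The Python sketch below assumes such a reduced, hypothetical input (`PairMapping` records with `None` for an unmapped mate); in practice the information would come from the read mapping itself, and real broken-pair checks also consider mate orientation and insert size, which this sketch ignores.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Iterable, Optional


@dataclass(frozen=True)
class PairMapping:
    """Hypothetical record: contig each mate mapped to (None if unmapped)."""
    mate1: Optional[str]
    mate2: Optional[str]


@dataclass
class MappingSummary:
    reads_total: int
    reads_mapped: int
    pairs_both_mapped: int
    broken_pairs: int
    broken_by_contig: Counter

    @property
    def fraction_mapped(self) -> float:
        return self.reads_mapped / self.reads_total if self.reads_total else 0.0

    @property
    def fraction_broken(self) -> float:
        return self.broken_pairs / self.pairs_both_mapped if self.pairs_both_mapped else 0.0


def summarize(pairs: Iterable[PairMapping]) -> MappingSummary:
    """Count mapped reads and pairs whose mates landed on different contigs."""
    reads_total = reads_mapped = both = broken = 0
    by_contig: Counter = Counter()
    for p in pairs:
        reads_total += 2
        reads_mapped += (p.mate1 is not None) + (p.mate2 is not None)
        if p.mate1 is not None and p.mate2 is not None:
            both += 1
            if p.mate1 != p.mate2:  # same library molecule, different contigs
                broken += 1
                by_contig[p.mate1] += 1
                by_contig[p.mate2] += 1
    return MappingSummary(reads_total, reads_mapped, both, broken, by_contig)


pairs = [
    PairMapping("c1", "c1"),
    PairMapping("c1", "c2"),
    PairMapping("c2", None),
    PairMapping(None, None),
    PairMapping("c2", "c2"),
]
summary = summarize(pairs)
print(summary.fraction_mapped, summary.fraction_broken, summary.broken_by_contig)
```

Tallying broken pairs per contig, rather than only globally, points directly at the contigs worth inspecting or splitting.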

The CLC de novo assembly whitepaper contains the details of the implementation of the algorithm, in addition to many benchmarks.

Conclusion:

The de novo assembler tool of the QIAGEN CLC Genomics Workbench is an easy-to-use, versatile and computationally efficient tool with applications in transcript assembly for plant genomics, and discovery of new lncRNAs and RNA viruses.

References:

Honaas, L.A. et al. (2016) Selecting superior de novo transcriptome assemblies: Lessons learned by leveraging the best plant genome. PLoS ONE 11(1): e0146062.

Stough, J. et al. (2018) Diversity of active viral infections within the Sphagnum microbiome. Applied and Environmental Microbiology 84(23): e01124-18.

Valenzuela-Muñoz, V. et al. (2019) Comparative modulation of lncRNAs in wild-type and rag1-heterozygous mutant zebrafish exposed to immune challenge with spring viraemia of carp virus (SVCV). Sci Rep 9: 14174.

Vendramin, V. et al. (2019) Genomic tools for durum wheat breeding: de novo assembly of Svevo transcriptome and SNP discovery in elite germplasm. BMC Genomics 20: 278.

Many great papers citing CLC Genomics Workbench have come out just in the last few months. To give you an overview of how our software can be used in different types of research, we've selected a handful of papers to be presented here.

Illumina Synthetic Long Read Sequencing Allows Recovery of Missing Sequences even in the “Finished” C. elegans Genome

First author: Runsheng Li

This Scientific Reports paper from scientists at Hong Kong Baptist University and Illumina describes an effort to analyze the C. elegans genome using synthetic long reads, which recovered some missing sequence in the new assembly. The researchers used CLC Genomics Workbench to map reads to a reference genome and to analyze differences between the two assemblies.

Generation of whole genome sequences of new Cryptosporidium hominis and Cryptosporidium parvum isolates directly from stool samples

First authors: Stephen Hadfield, Justin Pachebat, Martin Swain

In this BMC Genomics publication, scientists from Singleton Hospital and Aberystwyth University in the UK report a new method for sequencing the whole genome of Cryptosporidium species from human stool samples. This approach is significantly faster and less resource-intensive than current methods to sequence this organism. CLC Genomics Workbench was used to perform bioinformatics analysis and de novo contig assembly.

Are Shiga toxin negative Escherichia coli O157:H7 enteropathogenic or enterohaemorrhagic Escherichia coli? A comprehensive molecular analysis using whole genome sequencing

First author: Mithila Ferdous

Scientists from the University of Groningen and other organizations report in the Journal of Clinical Microbiology a project designed to analyze virulence in E. coli isolates, both with and without the gene encoding Shiga toxin. They used CLC Genomics Workbench to perform de novo assembly of the sequence data.

Bioinformatic Challenges in Clinical Diagnostic Application of Targeted Next Generation Sequencing: Experience from Pheochromocytoma

First author: Joakim Crona

In PLoS One, researchers from Uppsala University and the University of Duisburg-Essen evaluated CLC Genomics Workbench and other bioinformatics tools to assess their reliability for potential use in a clinical setting. They report findings on sensitivity, specificity, read alignment and more, concluding that these methods were at least as good as Sanger sequencing with earlier analysis tools.

Analysis of conglutin seed storage proteins across lupin species using transcriptomic, protein and comparative genomic approaches

First author: Rhonda Foley

Researchers from CSIRO Agriculture Flagship and the University of Western Australia report in BMC Plant Biology the genomic and transcriptomic analysis of conglutin proteins in lupin seeds during varying stages of development. These proteins are particularly interesting for their nutritional and pharmaceutical benefits. The authors used CLC Genomics Workbench to identify and align conglutin sequences from RNA-seq data.


Did we miss your paper? Please contact us; we would love to feature you!