For those of us working in pharma drug or biomarker discovery, artificial intelligence (AI) plays a vital role in how we collect biological and pharmacological data. AI is used not only at every step of the drug design pipeline; it also helps identify safer and more effective drug candidates in preclinical studies while dramatically reducing development costs (1,2).

Yet AI-derived data carry a major and potentially dangerous drawback: their accuracy is not guaranteed.

The unfortunate side effect of AI

Imagine you're a bioinformatician supporting discovery research projects in pharma. You work with biologists on experiments to prioritize leads for further drug development. You run a full analysis of existing data to help define which drug targets have the highest likelihood of therapeutic success. You use an AI-derived knowledge base to pull available 'omics data from a range of dataset repositories and match that data with your company's internal data.

You analyze the data with your biologist colleagues to generate hypotheses and define experiments to validate them. After six months of costly but failed experiments, you realize something was off in the initial analysis and that your hypotheses were entirely misguided. After backtracking, you discover that the disease-state annotations in the AI-derived data were inconsistent, which led to a complete misinterpretation of the data.

Now you've spent half a year, thousands of dollars and countless hours of research following a dead lead. And your team has nothing to show for it.

AI-derived data: Does it make sense?

In the past few months, you've probably read countless news stories about ChatGPT. It's a powerful tool that uses AI to generate detailed answers to virtually any question you throw at it. Yet a recognized drawback is that these answers are often factually inaccurate. Try asking it to write your bio, or the bio of your best friend. It will likely generate a lot of false information that may nonetheless seem plausible to people who don't know you or your friend.

ChatGPT is just one example of how AI can be an impressive tool, but one that should be handled with extreme caution. After all, how can you trust insights or hypotheses derived from information that only might be accurate? Or partially accurate? Or worse still, completely inaccurate?

The answer is to couple AI with human-certified, manual curation.

We all recognize the incredible power and potential of AI to collect and bring together seemingly relevant data. Yet 'omics and biological relationships data are complex and nuanced, and they require context that AI-derived data alone can't provide.

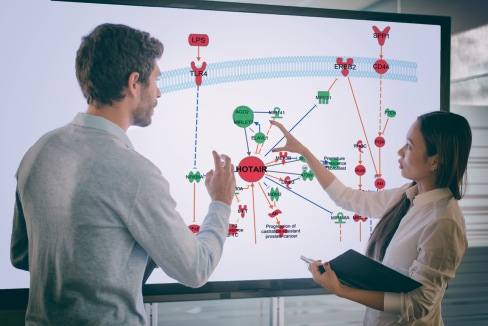

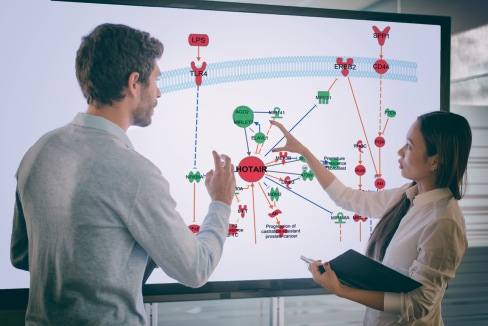

As Figure 1 demonstrates, without the human 'magic touch' of aligning data, correcting errors and removing irrelevant information, AI-derived data alone leave you with a jumble of information that may or may not be accurate, which could send you down a rabbit hole in pursuit of your next biomarker or target discovery.

Figure 1. Decision tree for using AI-derived data.

We're confident that by using our manually curated, human-certified 'omics data, you'll quickly gain reliable insights to generate and confirm your hypotheses. We offer you direct access to the most extensive collections of integrated and standardized 'omics and biological relationships data, manually curated by a team of experts holding MS and PhD degrees. In short, we find errors and correct them so that the data you work with are reliable and accurate.

This means that when you use our manually curated 'omics and biological relationships data, you'll avoid the stressful and frustrating consequences of being led astray by inaccurate data riddled with inconsistencies and errors.

Don't let bad data compromise your projects. And don't waste time fixing and cleaning the data yourself. Get direct access to 'golden' data that deliver true and immediate insights: ready-to-use, manually curated data cleaned of errors and inconsistencies.

“Truth, like gold, is to be obtained not by its growth, but by washing away from it all that is not gold.”

Leo Tolstoy


We wash away the 'dirt' so you can mine and collect clean and golden data.

References:

With the scientific research community publishing over two million peer-reviewed articles every year since 2012 (1) and next-generation sequencing fueling a data explosion, the need for comprehensive yet accurate, reliable and analysis-ready information on the path to biomedical discoveries is now more pressing than ever.

Manual curation has become essential to producing such data. Data scientists spend an estimated 80% of their time collecting, cleaning and processing data, leaving only about 20% of their time for analyzing the data to generate insights (2,3). But manual curation is not just time-consuming. It is also costly and challenging to scale.

We at QIAGEN take on the task of manual curation so researchers like you can focus on making discoveries. Our human-certified data enable you to concentrate on generating insights rather than collecting data. QIAGEN has been curating biomedical and clinical data for over 25 years. We've made massive investments in a biomedical and clinical knowledge base that contains millions of manually reviewed findings from the literature, plus information from commonly used third-party databases and 'omics dataset repositories. With our knowledge and databases, scientists can generate high-quality, novel hypotheses quickly and efficiently while using innovative and advanced approaches, including artificial intelligence.

Here are seven best practices for manual curation, presented at the November 2021 Pistoia Alliance event, that QIAGEN's 200 dedicated curation experts follow.

These principles ensure that our knowledge base and integrated 'omics database deliver timely, highly accurate, reliable and analysis-ready data. In our experience, 40% of public 'omics datasets include typos or other potentially critical errors in an essential element (cell lines, treatments, etc.), and 5% require us to contact the authors to resolve inconsistent terms, mislabeled treatments or infections, inaccurate sample groups or errors in mapping subjects to samples. Thanks to our stringent manual curation processes, we can correct such errors.
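To make "a typo in an essential element" concrete, here is a minimal Python sketch, not QIAGEN's curation procedure, of an automated pre-screen that flags sample annotations missing from a controlled vocabulary of cell-line names and suggests the closest term using the standard library's `difflib.get_close_matches`. The vocabulary, the sample IDs, the helper name `flag_annotations` and the 0.6 similarity cutoff are all hypothetical. A script like this can only surface candidates; deciding whether "MCF7" really means MCF-7, or whether a mismatch needs an author query, remains the manual step described above.

```python
import difflib

# Hypothetical controlled vocabulary of cell-line names (illustrative only).
CELL_LINE_VOCAB = ["HeLa", "MCF-7", "A549", "HEK293", "Jurkat"]


def flag_annotations(annotations, vocab, cutoff=0.6):
    """Return {sample_id: (raw_value, suggestion_or_None)} for values not in vocab.

    `flag_annotations` is a hypothetical helper; the cutoff is the minimum
    difflib similarity ratio (0 to 1) for a term to be offered as a suggestion.
    """
    flagged = {}
    for sample_id, value in annotations.items():
        if value in vocab:
            continue  # exact match: nothing for a curator to review
        # Offer the single closest vocabulary term; a human still decides.
        matches = difflib.get_close_matches(value, vocab, n=1, cutoff=cutoff)
        flagged[sample_id] = (value, matches[0] if matches else None)
    return flagged


# Hypothetical sample annotations with two likely typos and one unknown value.
samples = {"S1": "HeLa", "S2": "MCF7", "S3": "A-549", "S4": "unknown line"}
print(flag_annotations(samples, CELL_LINE_VOCAB))
```

Values with no sufficiently close match come back with `None`, which is the kind of case that would need a closer look or, as the text notes, a query to the dataset's authors.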

Our extensive investment in high-quality manual curation means that scientists like you don't need to spend 80% of their time aggregating and cleaning data. We've scaled our rigorous manual curation procedures to collect and structure accurate and reliable information from many different sources, from journal articles to drug labels to 'omics datasets. In short, we accelerate your journey to comprehensive yet accurate, reliable and analysis-ready data.

Ready to get your hands on reliable biomedical, clinical and 'omics data that we've manually curated using these best practices? Learn about QIAGEN knowledge and databases, and request a consultation to find out how our accurate and reliable data will save you time and get you quick answers to your questions.

References: